Task: Reach Red Target

Ranked in the top 5 of 46 projects in CMU 11-785, Introduction to Deep Learning (Fall 2020) - Project Gallery

Intro

Reinforcement learning (RL) is a powerful learning technique that enables agents to learn useful policies by interacting directly with the environment. While this training approach doesn’t require labeled data, it is plagued with convergence issues and is highly sample inefficient. The learned policies are often very specific to the task at hand and are not generalizable to similar task spaces.

Meta-reinforcement learning is one strategy that can mitigate these issues, enabling robots to acquire new skills much more quickly. Often described as “learning how to learn”, it allows agents to leverage prior experience much more effectively. Recently published meta-learning papers show impressive gains in speed and sample efficiency over the traditional approach of relearning the task from scratch for each slight variation in the task objective. One concern with these meta-learning methods is that their success has only been demonstrated on relatively small modifications to the initial task.

Another concern in RL, as highlighted by Henderson et al., is reproducibility and the lack of standardized metrics and approaches, which can lead to wide gaps between reported and observed performance. To alleviate that, benchmarking frameworks such as Meta-World and RLBench have been proposed to establish common ground for a fair comparison between approaches. In this work, we aim to utilize the task variety proposed by Meta-World and compare PPO, a vanilla policy gradient algorithm, with its meta-learning counterparts (MAML and Reptile). The objective is to verify how much meta-learning algorithms improve over the vanilla variant, and to probe their limits on a variety of complicated tasks as the size of the task variation grows. We also introduce a new technique, Multi-headed Reptile, which aims to address some of the shortcomings of both of these meta-learning techniques, along with implementation suggestions that prevent computational slowdown.

Results

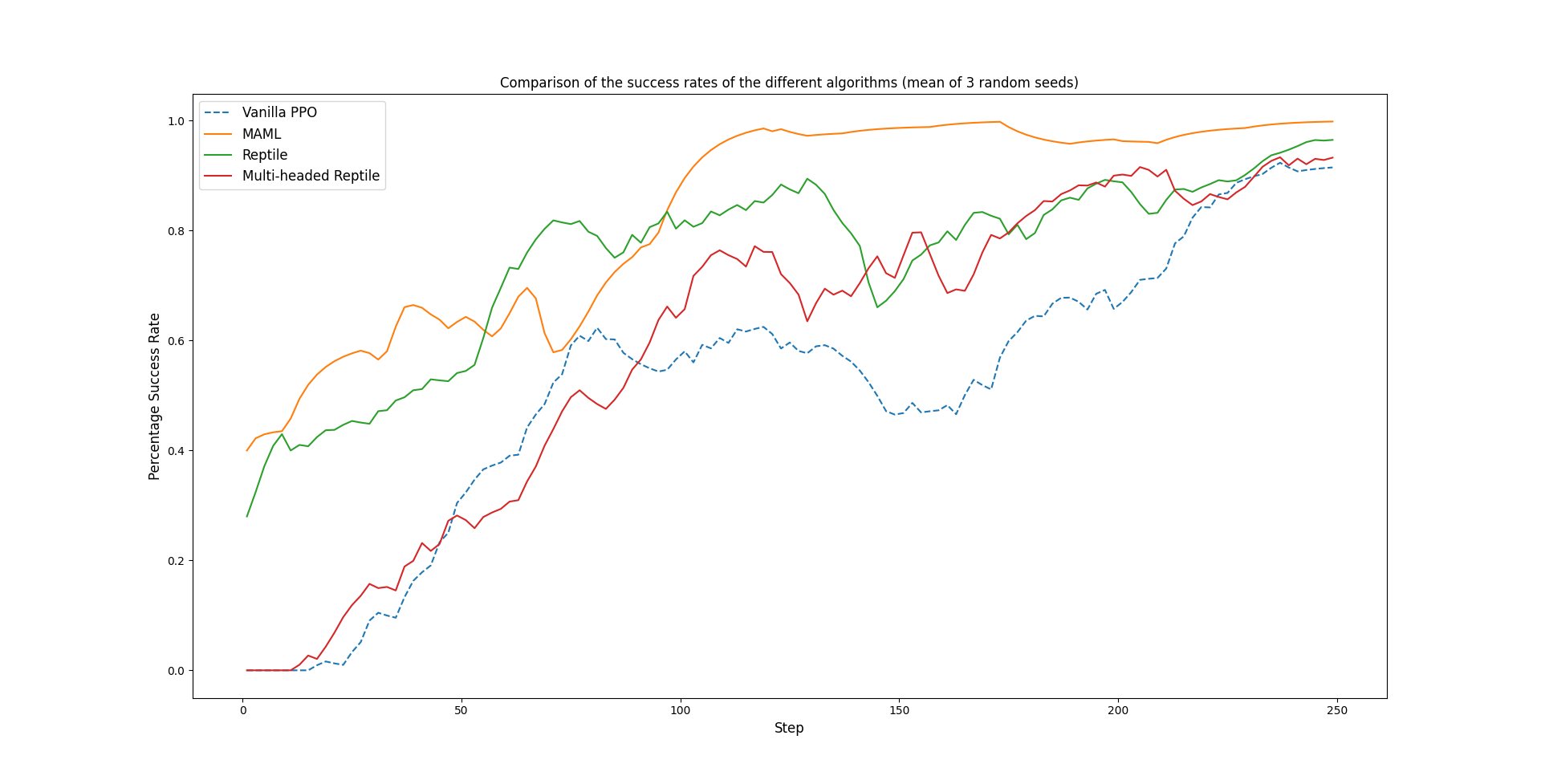

The figure above compares the success rate of each model, averaged over the 10 test tasks, after initial training on other tasks. MAML and Reptile both converge to an 80% success rate within 100 iterations, whereas Multi-headed Reptile and the randomly initialized baseline (Vanilla PPO) require 150 and 225 iterations respectively.
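The metric described above can be sketched as follows. This is only an illustration of averaging per-task success rates and finding the first iteration that reaches a threshold; the function names and the toy numbers are hypothetical and are not taken from our experiments or code.

```python
from typing import List, Optional, Sequence


def mean_success_curve(per_task: Sequence[Sequence[float]]) -> List[float]:
    """Average success rate over tasks at each iteration.

    per_task[t][i] is the success rate of task t at iteration i.
    """
    n_tasks = len(per_task)
    return [sum(column) / n_tasks for column in zip(*per_task)]


def first_iteration_reaching(curve: Sequence[float],
                             threshold: float = 0.8) -> Optional[int]:
    """Index of the first iteration whose mean success meets the threshold."""
    for i, value in enumerate(curve):
        if value >= threshold:
            return i
    return None  # the threshold was never reached


# Toy numbers for two tasks over three iterations (illustrative only).
toy = [[0.0, 0.5, 1.0],
       [0.4, 0.7, 0.8]]
curve = mean_success_curve(toy)
reached_at = first_iteration_reaching(curve, threshold=0.8)
```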

As theorized, both MAML and Reptile converge significantly more quickly than Vanilla PPO. Interestingly, even in the first iteration, these two meta-learning techniques achieve a success rate of around 40%, which suggests that they have learned something about the nature of the task distribution.

However, we found that Multi-headed Reptile does not work as well as we expected: its initial performance is about the same as Vanilla PPO's. Although Multi-headed Reptile converged in fewer iterations than Vanilla PPO, its poor initial performance indicates that it failed to learn a good weight initialization. As a sanity check, Vanilla PPO indeed has poor initial performance due to its randomly initialized weights.

Tools

- RLBench provided the baseline robot environment that we built upon.

- Stable-Baselines3 provided the vanilla PPO algorithm.

- Ray provided an easy way to create multiple, independent simulation workers that train different copies of the model on different tasks.

- Weights & Biases (wandb) was critical in helping us manage different training runs and keep track of experiments.

Contribution

I first built much of the initial training and evaluation pipeline, eventually getting PPO to train properly on the reach task. Because this is a robotics application, I had to restrict the magnitude of the agent's output actions so that they were physically feasible. See this Git issue for more details. Without this restriction, many of the agent's actions triggered errors in the simulator's inverse kinematics solver, and initial training was extremely slow.
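One way to restrict action magnitude is to rescale each end-effector position delta so that its Euclidean norm stays under a cap. The sketch below assumes a 3D delta action; the function name `clip_action_norm` and the 0.05 limit are hypothetical, and the actual restriction we used is the one described in the Git issue, which may differ.

```python
import math
from typing import List, Sequence


def clip_action_norm(delta_xyz: Sequence[float],
                     max_step: float = 0.05) -> List[float]:
    """Rescale a position delta so its Euclidean norm is at most max_step.

    max_step is an assumed limit, not a value taken from the project.
    The direction of the action is preserved; only its length shrinks.
    """
    norm = math.sqrt(sum(c * c for c in delta_xyz))
    if norm <= max_step:
        return list(delta_xyz)  # already small enough, leave unchanged
    scale = max_step / norm
    return [c * scale for c in delta_xyz]


# A large raw policy output is shrunk to length max_step.
safe_action = clip_action_norm([0.3, 0.0, 0.4], max_step=0.05)
```

Rescaling keeps the direction the policy chose, whereas clipping each coordinate independently would distort it; either choice keeps requested motions small enough for an inverse kinematics solver to have a better chance of finding a solution.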

I next wrote much of MAML and Reptile. See here for our implementation. I also helped modify our architecture to utilize Ray for parallelization over independent tasks, as in the case of Multi-headed Reptile. See here for hyperparameters.
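For readers unfamiliar with Reptile, the outer update moves the shared initialization toward the weights obtained after adapting to each task. The sketch below is not our implementation: it uses plain Python lists as parameters and a toy quadratic objective as a stand-in for inner-loop PPO training, and the names `reptile_step` and `make_quadratic_task` are hypothetical. Because each task adapts its own copy of the weights, the inner loops are independent, which is the kind of work that can be spread across parallel workers.

```python
from typing import Callable, List, Sequence

Params = List[float]


def reptile_step(theta: Params,
                 tasks: Sequence[Callable[[Params], Params]],
                 meta_lr: float = 0.5) -> Params:
    """One batched Reptile outer step.

    Each task adapts its own copy of theta; theta then moves a fraction
    meta_lr of the way toward the mean of the adapted weights.
    """
    adapted = [adapt(list(theta)) for adapt in tasks]
    mean_adapted = [sum(ws) / len(adapted) for ws in zip(*adapted)]
    return [t + meta_lr * (m - t) for t, m in zip(theta, mean_adapted)]


def make_quadratic_task(target: Params,
                        inner_lr: float = 0.1,
                        inner_steps: int = 5) -> Callable[[Params], Params]:
    """Toy task: gradient descent on 0.5 * ||w - target||^2.

    This stands in for a few iterations of PPO on one task.
    """
    def adapt(w: Params) -> Params:
        for _ in range(inner_steps):
            w = [wi - inner_lr * (wi - ti) for wi, ti in zip(w, target)]
        return w
    return adapt


# Two toy tasks with different optima; theta drifts toward a point
# from which both optima are quickly reachable.
tasks = [make_quadratic_task([1.0, 0.0]), make_quadratic_task([0.0, 1.0])]
theta = [0.0, 0.0]
for _ in range(50):
    theta = reptile_step(theta, tasks)
```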

Thoughts

Meta-learning certainly shows much promise, as indicated by the high initial success rate of MAML and Reptile. However, I'd like to see how these algorithms perform on more complicated tasks than the basic reach task. These results were unfortunately achieved with a strong reward signal (the Euclidean distance of the end-effector (EE) from the target), and since the action space was the change in EE position, the model in effect only had to learn the vector from the current position to the target. I wonder how a sparse reward would change performance. For robots to solve general tasks in households or factories, rewards should not need to be hand-designed.
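To make the dense versus sparse distinction concrete, here is a minimal sketch of the two reward styles for a reach task. The negative sign on the distance, the function names, and the 0.02 success tolerance are assumptions for illustration, not the exact reward used in the project.

```python
import math
from typing import Sequence


def dense_reward(ee_pos: Sequence[float], target: Sequence[float]) -> float:
    """Shaped reward: negative Euclidean distance from the EE to the target.

    Every step tells the agent whether it moved closer or further away.
    """
    return -math.dist(ee_pos, target)


def sparse_reward(ee_pos: Sequence[float], target: Sequence[float],
                  tol: float = 0.02) -> float:
    """Sparse reward: 1 only when the EE is within tol of the target.

    tol is an assumed success radius. Away from the target the agent
    receives no signal about which direction to move.
    """
    return 1.0 if math.dist(ee_pos, target) <= tol else 0.0


ee = [0.0, 0.0, 0.0]
goal = [3.0, 4.0, 0.0]
shaped = dense_reward(ee, goal)   # distance of 5 gives a reward of -5
binary = sparse_reward(ee, goal)  # far from the goal, so no reward
```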