Intro

Over the last few years, drones have become increasingly popular for both recreational and professional videography. Vloggers can take breathtaking aerial shots of their escapades through mountains and fjords. And during search-and-rescue missions, rescuers can use drone footage to identify victims and monitor their own surroundings to call for backup if needed. Yet, despite the numerous use cases, one bottleneck stands in the way when it comes to capturing drone footage: manual control.

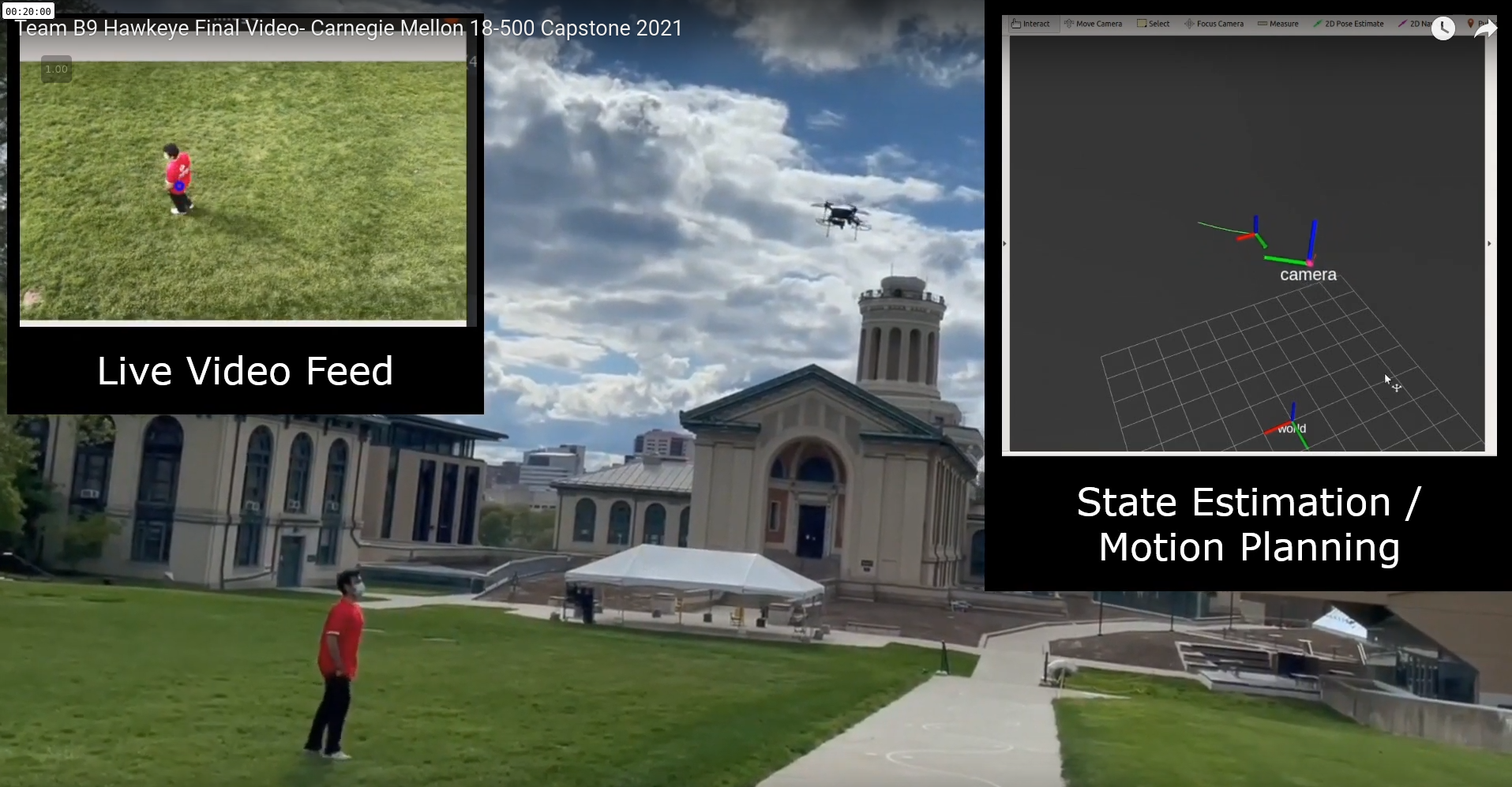

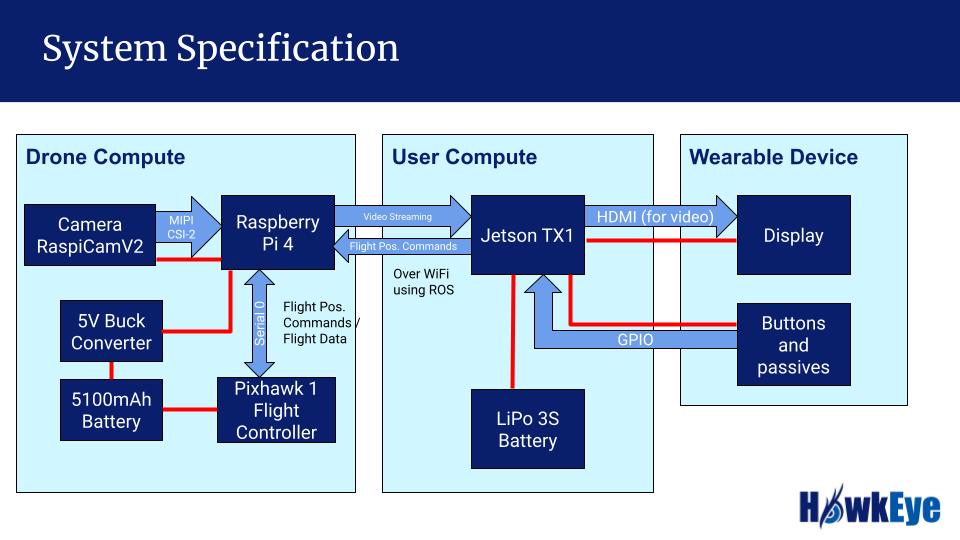

We created a drone capable of tracking targets in real time as they move across the ground. Unlike other drones, which require expensive LiDAR or depth cameras, our system only requires a 2D RGB camera and GPS to estimate the 3D position of targets. Our drone also streams a live video feed to a display. To maximize the drone's battery life and reduce its weight, all computation is offloaded to a small, portable computer, in our case the Jetson TX1.

System Architecture

Target Detection

We require the target to wear a shirt of a specific color and apply RGB color thresholding to isolate their pixels in an image. This yields a raw 2D pixel position. Next, using camera intrinsic parameters (e.g., focal length) determined through calibration, we transform the 2D pixel values to a 3D position with respect to the camera's sensor. Since we built a full-scale CAD model of our drone, we know the exact Cartesian transformation from the camera sensor to our drone's overall pose. Finally, using the drone's own onboard pose estimation, we can define the target's 3D position in real-world space. This can be summarized as:

- Image → Camera

- Camera → Drone

- Drone → World
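The chain above can be sketched in a few lines of NumPy. This is a minimal illustration under stated assumptions, not our exact implementation: the function names, the RGB bounds, and the frame conventions are hypothetical, and the step from a 2D pixel to a 3D point assumes the target stands on flat ground at a known height (`ground_z`), so the camera ray can be intersected with that plane.

```python
import numpy as np

def detect_target_pixel(image_rgb, lower, upper):
    """Return the (u, v) centroid of pixels whose RGB values lie in [lower, upper].

    Returns None when no pixel passes the color threshold.
    """
    lower, upper = np.asarray(lower), np.asarray(upper)
    mask = np.all((image_rgb >= lower) & (image_rgb <= upper), axis=-1)
    if not mask.any():
        return None
    rows, cols = np.nonzero(mask)
    return np.array([cols.mean(), rows.mean()])  # u = column, v = row

def pixel_to_camera_ray(pixel, K):
    """Image -> Camera: back-project a pixel through the pinhole intrinsics K."""
    ray = np.linalg.inv(K) @ np.array([pixel[0], pixel[1], 1.0])
    return ray / np.linalg.norm(ray)

def locate_target(pixel, K, T_drone_cam, T_world_drone, ground_z=0.0):
    """Camera -> Drone -> World, then intersect the ray with the ground plane.

    T_drone_cam:   4x4 camera pose in the drone frame (e.g. from a CAD model).
    T_world_drone: 4x4 drone pose in the world frame (onboard pose estimate).
    Assumption (not from the text): world z points up and the ground is flat.
    """
    ray_cam = pixel_to_camera_ray(pixel, K)
    T_world_cam = T_world_drone @ T_drone_cam
    origin = T_world_cam[:3, 3]
    direction = T_world_cam[:3, :3] @ ray_cam
    if direction[2] >= 0:
        return None  # ray points at or above the horizon, so it never hits the ground
    scale = (ground_z - origin[2]) / direction[2]
    return origin + scale * direction
```

In use, `detect_target_pixel` would run on each incoming frame, and its result would be passed to `locate_target` together with the calibrated `K` and the latest drone pose. If the ground is not flat, the plane intersection is the part of this sketch that would need replacing.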

Motion Planning

Now that we have the target's position, we can use this to plan the drone's motion. This involves three key steps:

- Drone Dynamics Model: We first need to define a model of the drone’s state and how control inputs affect this state. We represent the drone’s state as a simple 2D position [x, y] with an assumed fixed height of 8 m. We define the control input as horizontal velocity. This means that we cannot rotate the drone to face a target, but must move horizontally. While these assumptions do limit our control of the drone, this behavior fits our use case: by maintaining sufficient distance from the target, the drone does not need much agility. These simple dynamics also allow our computation to meet the required rate specified by the drone’s flight controller.

-

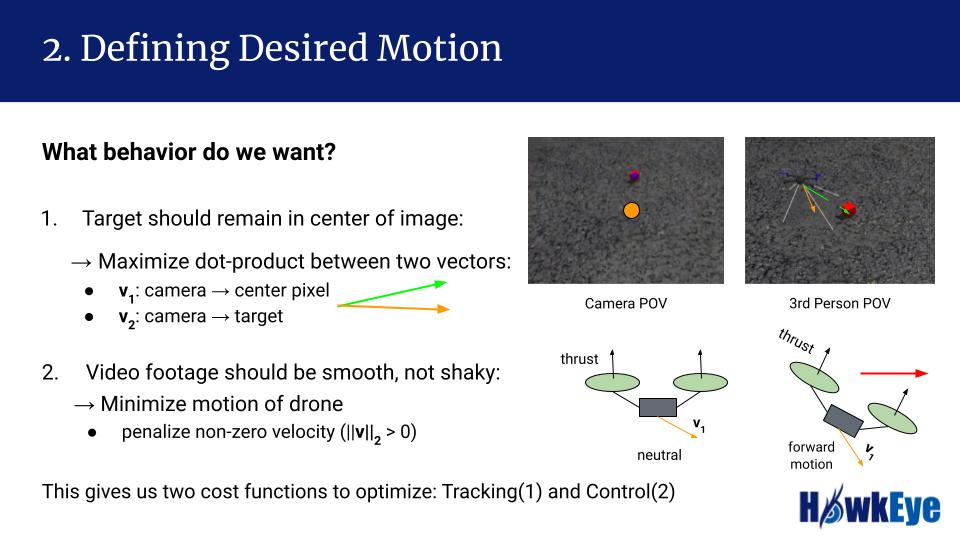

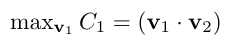

Define Desired Behavior: Next, we need to define some behavior for the drone. We firstly want the

drone to keep the camera focused on the target,meaning the target should stay in the center of the image.

This objective can be represented as a maximization of the dot-product between two vectors:

where v1 is the fixed vector from the camera center to the center pixel on the image plane and

v2 is the dynamic vector from the drone’s camera to the 3D target position, obtained from the

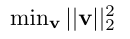

target detection pipeline. Second, we optimize for smooth, stable video footage,and this can be achieved

by minimizing the motion of the drone represented in terms of velocity control input:

where v1 is the fixed vector from the camera center to the center pixel on the image plane and

v2 is the dynamic vector from the drone’s camera to the 3D target position, obtained from the

target detection pipeline. Second, we optimize for smooth, stable video footage,and this can be achieved

by minimizing the motion of the drone represented in terms of velocity control input:

where v = [vx, vy] is the velocity control input. We optimize these two objectives over a time

horizon of 1 second with a step size of dt= 0.1 seconds. We use the predicted target trajectory inside

this optimization. We have tuned weights for the above two costs to achieve desired behavior.

where v = [vx, vy] is the velocity control input. We optimize these two objectives over a time

horizon of 1 second with a step size of dt= 0.1 seconds. We use the predicted target trajectory inside

this optimization. We have tuned weights for the above two costs to achieve desired behavior.

- Solve for Behavior: Now that we have defined our formulation, we can solve it using an optimization package; in our case, SciPy's optimization package. We use a Model Predictive Control (MPC) formulation: every iteration, we optimize an entire trajectory over multiple timesteps, but execute only the first step of that plan before replanning. Because of this feedback loop, our motion is not only optimal with respect to our objectives, but also reactive to position drift and external disturbances like wind.
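The three steps above can be sketched as a small receding-horizon planner. This is an illustrative sketch rather than our exact code. The fixed height, horizon, and step size come from the text; everything else is an assumption: the function names, the weights, the velocity bound `v_max`, the choice of the L-BFGS-B solver, normalizing v2 so the dot product measures alignment only, treating the camera as located at the drone's position, and expressing v1 in the world frame (reasonable here only because the drone does not rotate).

```python
import numpy as np
from scipy.optimize import minimize

DT = 0.1       # step size in seconds (from the text)
HORIZON = 10   # 1 second horizon at 0.1 s per step (from the text)
HEIGHT = 8.0   # assumed fixed flight height in metres (from the text)

def rollout(position, velocities):
    """Dynamics model: x_{k+1} = x_k + v_k * dt, returned for every step."""
    return position + DT * np.cumsum(velocities, axis=0)

def mpc_cost(flat_velocities, position, target_path, v1, w_focus, w_smooth):
    """Weighted sum of the focus and smoothness costs over the horizon."""
    velocities = flat_velocities.reshape(HORIZON, 2)
    positions = rollout(position, velocities)
    drone_xyz = np.column_stack([positions, np.full(HORIZON, HEIGHT)])
    # v2: unit vector from the camera to the predicted target at each step.
    v2 = target_path - drone_xyz
    v2 = v2 / np.linalg.norm(v2, axis=1, keepdims=True)
    focus = -np.sum(v2 @ v1)           # maximize alignment = minimize its negative
    smooth = np.sum(velocities ** 2)   # penalize drone motion
    return w_focus * focus + w_smooth * smooth

def plan_step(position, target_path, v1, w_focus=1.0, w_smooth=0.01, v_max=5.0):
    """Optimize the whole velocity sequence, but return only its first step."""
    result = minimize(
        mpc_cost,
        x0=np.zeros(HORIZON * 2),
        args=(position, target_path, v1, w_focus, w_smooth),
        method="L-BFGS-B",  # assumed solver choice; the text only names SciPy
        bounds=[(-v_max, v_max)] * (HORIZON * 2),
    )
    return result.x.reshape(HORIZON, 2)[0]
```

Calling `plan_step` once per control cycle with the latest drone position and a freshly predicted `target_path` (an array of shape `(HORIZON, 3)`) gives the feedback loop described above: each call discards the rest of the previous plan and replans from the current state.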

Thoughts

Though we only had a few months to complete the project, I would really like to extend how the target interacts with the drone. Rather than having the drone purely follow the target, the target should be able to use hand gestures to control it. For example, when the target waves an arm from left to right, the drone should perform a fast, panoramic sweep from the left side of the target to the right and pull back up.